Address Space

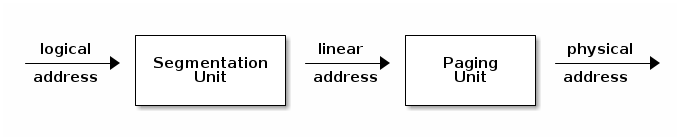

- x86 MMU

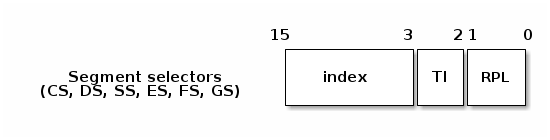

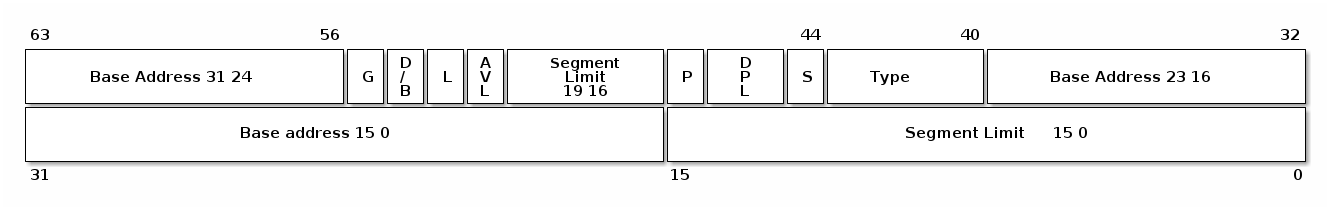

- Segmentation

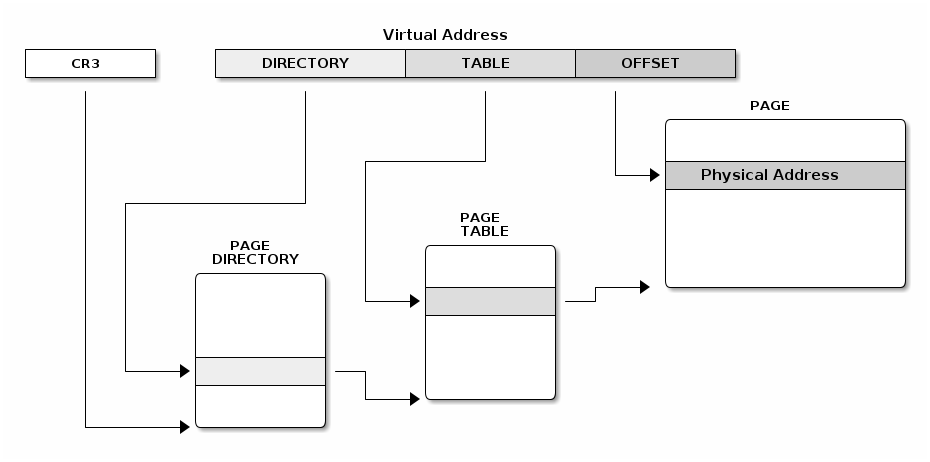

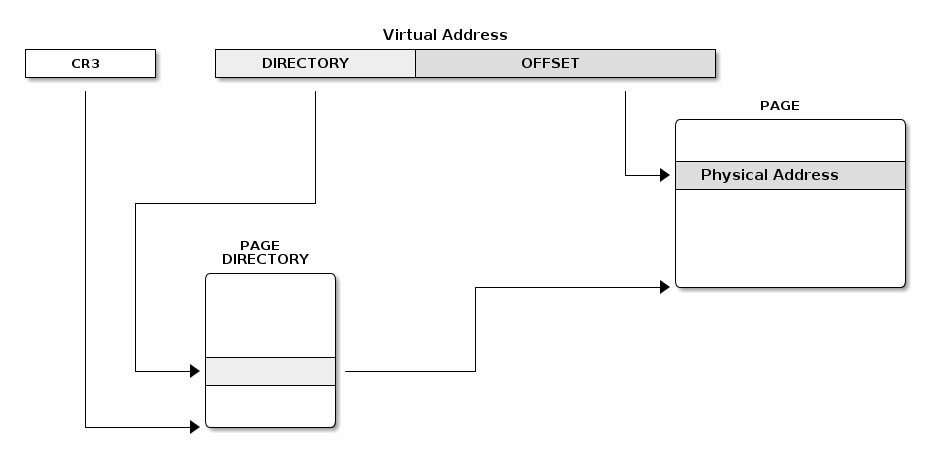

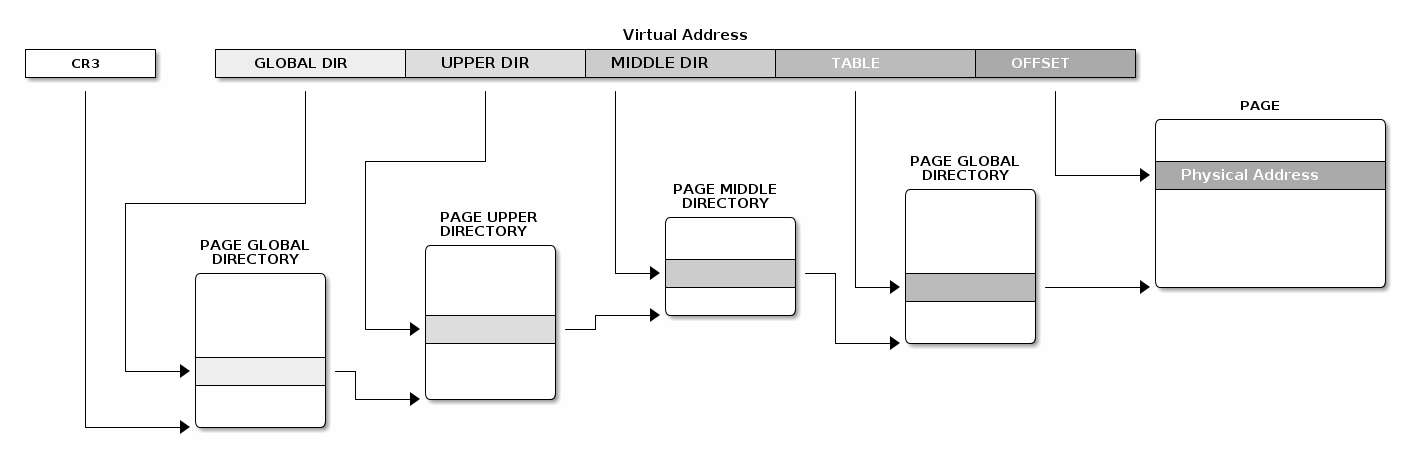

- Paging

- TLB

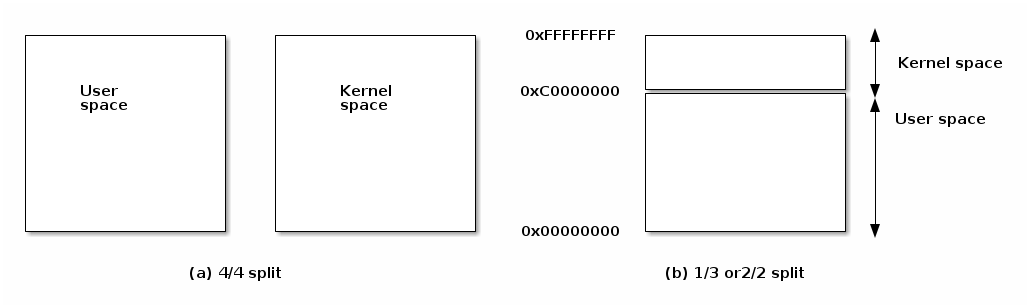

- Linux Address Space

- User

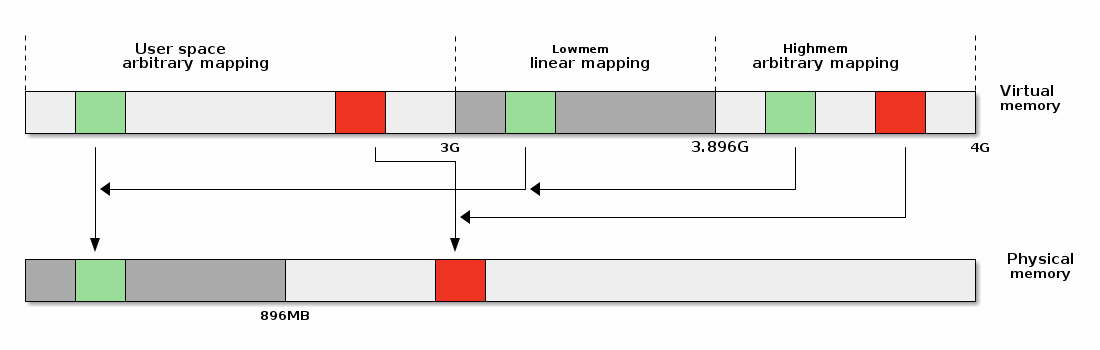

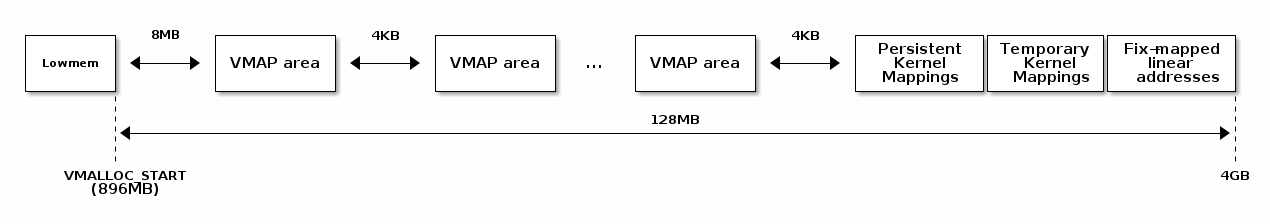

- Kernel

- High memory

/*

* The layout of the per-CPU GDT under Linux:

*

* 0 - null <=== cacheline #1

* 1 - reserved

* 2 - reserved

* 3 - reserved

*

* 4 - unused <=== cacheline #2

* 5 - unused

*

* ------- start of TLS (Thread-Local Storage) segments:

*

* 6 - TLS segment #1 [ glibc's TLS segment ]

* 7 - TLS segment #2 [ Wine's %fs Win32 segment ]

* 8 - TLS segment #3 <=== cacheline #3

* 9 - reserved

* 10 - reserved

* 11 - reserved

*

* ------- start of kernel segments:

*

* 12 - kernel code segment <=== cacheline #4

* 13 - kernel data segment

* 14 - default user CS

* 15 - default user DS

* 16 - TSS <=== cacheline #5

* 17 - LDT

* 18 - PNPBIOS support (16->32 gate)

* 19 - PNPBIOS support

* 20 - PNPBIOS support <=== cacheline #6

* 21 - PNPBIOS support

* 22 - PNPBIOS support

* 23 - APM BIOS support

* 24 - APM BIOS support <=== cacheline #7

* 25 - APM BIOS support

*

* 26 - ESPFIX small SS

* 27 - per-cpu [ offset to per-cpu data area ]

* 28 - stack_canary-20 [ for stack protector ] <=== cacheline #8

* 29 - unused

* 30 - unused

* 31 - TSS for double fault handler

*/
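The indices in the layout above are not what gets loaded into a segment register; the CPU expects a selector built from the index. The following is a minimal user-space sketch of that arithmetic. The helper name `make_selector` is hypothetical and not a kernel API; the bit positions follow the x86 selector format.

```c
#include <stdint.h>

/*
 * Illustrative helper (not a kernel API): build a segment selector.
 * Bits 15..3 hold the descriptor index, bit 2 is the table indicator
 * (0 = GDT, 1 = LDT) and bits 1..0 are the requested privilege level.
 */
static uint16_t make_selector(unsigned index, unsigned use_ldt, unsigned rpl)
{
    return (uint16_t)((index << 3) | ((use_ldt & 1u) << 2) | (rpl & 3u));
}
```

With the 32-bit layout listed above, entry 12 (kernel code) at privilege level 0 gives selector 0x60, and entry 14 (default user CS) at privilege level 3 gives 0x73.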

DEFINE_PER_CPU_PAGE_ALIGNED(struct gdt_page, gdt_page) = { .gdt = {

#ifdef CONFIG_X86_64

/*

* We need valid kernel segments for data and code in long mode too

* IRET will check the segment types kkeil 2000/10/28

* Also sysret mandates a special GDT layout

*

* TLS descriptors are currently at a different place compared to i386.

* Hopefully nobody expects them at a fixed place (Wine?)

*/

[GDT_ENTRY_KERNEL32_CS] = GDT_ENTRY_INIT(0xc09b, 0, 0xfffff),

[GDT_ENTRY_KERNEL_CS] = GDT_ENTRY_INIT(0xa09b, 0, 0xfffff),

[GDT_ENTRY_KERNEL_DS] = GDT_ENTRY_INIT(0xc093, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER32_CS] = GDT_ENTRY_INIT(0xc0fb, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_DS] = GDT_ENTRY_INIT(0xc0f3, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_CS] = GDT_ENTRY_INIT(0xa0fb, 0, 0xfffff),

#else

[GDT_ENTRY_KERNEL_CS] = GDT_ENTRY_INIT(0xc09a, 0, 0xfffff),

[GDT_ENTRY_KERNEL_DS] = GDT_ENTRY_INIT(0xc092, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_CS] = GDT_ENTRY_INIT(0xc0fa, 0, 0xfffff),

[GDT_ENTRY_DEFAULT_USER_DS] = GDT_ENTRY_INIT(0xc0f2, 0, 0xfffff),

/*

* Segments used for calling PnP BIOS have byte granularity.

* The code segments and data segments have fixed 64k limits,

* the transfer segment sizes are set at run time.

*/

/* 32-bit code */

[GDT_ENTRY_PNPBIOS_CS32] = GDT_ENTRY_INIT(0x409a, 0, 0xffff),

/* 16-bit code */

[GDT_ENTRY_PNPBIOS_CS16] = GDT_ENTRY_INIT(0x009a, 0, 0xffff),

/* 16-bit data */

[GDT_ENTRY_PNPBIOS_DS] = GDT_ENTRY_INIT(0x0092, 0, 0xffff),

/* 16-bit data */

[GDT_ENTRY_PNPBIOS_TS1] = GDT_ENTRY_INIT(0x0092, 0, 0),

/* 16-bit data */

[GDT_ENTRY_PNPBIOS_TS2] = GDT_ENTRY_INIT(0x0092, 0, 0),

/*

* The APM segments have byte granularity and their bases

* are set at run time. All have 64k limits.

*/

/* 32-bit code */

[GDT_ENTRY_APMBIOS_BASE] = GDT_ENTRY_INIT(0x409a, 0, 0xffff),

/* 16-bit code */

[GDT_ENTRY_APMBIOS_BASE+1] = GDT_ENTRY_INIT(0x009a, 0, 0xffff),

/* data */

[GDT_ENTRY_APMBIOS_BASE+2] = GDT_ENTRY_INIT(0x4092, 0, 0xffff),

[GDT_ENTRY_ESPFIX_SS] = GDT_ENTRY_INIT(0xc092, 0, 0xfffff),

[GDT_ENTRY_PERCPU] = GDT_ENTRY_INIT(0xc092, 0, 0xfffff),

GDT_STACK_CANARY_INIT

#endif

} };

EXPORT_PER_CPU_SYMBOL_GPL(gdt_page);
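The first argument of each `GDT_ENTRY_INIT` above packs the descriptor's attribute bits into one 16-bit value. The sketch below unpacks such a value in user space, assuming the bit layout that `GDT_ENTRY_INIT` uses in kernels of this vintage (type in bits 3..0, S in bit 4, DPL in bits 6..5, P in bit 7, then AVL, L, D and G in bits 12..15). The struct and function names are hypothetical.

```c
#include <stdint.h>

/* Illustrative container for the attribute bits of a segment descriptor. */
struct seg_attrs {
    unsigned type; /* segment type, e.g. 0xb = code, execute/read, accessed */
    unsigned s;    /* 1 = code or data segment, 0 = system segment */
    unsigned dpl;  /* descriptor privilege level, 0..3 */
    unsigned p;    /* present */
    unsigned avl;  /* available for software use */
    unsigned l;    /* 64-bit code segment */
    unsigned d;    /* default operand size: 1 = 32-bit, 0 = 16-bit */
    unsigned g;    /* granularity: 1 = limit counted in 4 KiB units */
};

/* Unpack the 16-bit flags value (assumed layout, see the text above). */
static struct seg_attrs decode_flags(uint16_t flags)
{
    struct seg_attrs a;
    a.type = flags & 0x0f;
    a.s    = (flags >> 4) & 0x01;
    a.dpl  = (flags >> 5) & 0x03;
    a.p    = (flags >> 7) & 0x01;
    a.avl  = (flags >> 12) & 0x01;
    a.l    = (flags >> 13) & 0x01;
    a.d    = (flags >> 14) & 0x01;
    a.g    = (flags >> 15) & 0x01;
    return a;
}

/* Number of bytes covered by a 20-bit limit, given the granularity bit. */
static uint64_t segment_size(uint32_t limit, unsigned g)
{
    uint64_t units = (uint64_t)limit + 1;
    return g ? units << 12 : units;
}
```

Under this layout, 0xa09b decodes to a present, ring 0, 64-bit code segment, while 0xc0f3 decodes to a ring 3, 32-bit data segment. A limit of 0xfffff with G set covers 4 GiB, and a limit of 0xffff with G clear covers the 64k mentioned for the PnP BIOS and APM segments.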

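struct page *page;

pgd_t *pgd;

pud_t *pud;

pmd_t *pmd;

pte_t *pte;

void *laddr;

phys_addr_t paddr;

/* Walk the page tables of mm, one level at a time, for vaddr. */

pgd = pgd_offset(mm, vaddr);

pud = pud_offset(pgd, vaddr);

pmd = pmd_offset(pud, vaddr);

/* pte_offset_map() or pte_offset_kernel() in current kernels */

pte = pte_offset(pmd, vaddr);

page = pte_page(*pte);

laddr = page_address(page);

paddr = virt_to_phys(laddr);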

static inline pud_t * pud_offset(pgd_t * pgd,unsigned long address)

{

return (pud_t *)pgd;

}

static inline pmd_t * pmd_offset(pud_t * pud,unsigned long address)

{

return (pmd_t *)pud;

}
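Because `pud_offset()` and `pmd_offset()` above simply return their argument when those levels are folded, the walk reduces to two real lookups on classic 32-bit x86 without PAE. The following user-space sketch imitates that two-level translation with 4 KiB pages. All `toy_` names and the in-memory table representation are assumptions made for illustration; real page tables hold physical addresses and more flag bits.

```c
#include <stddef.h>
#include <stdint.h>

#define TOY_PAGE_SHIFT   12                       /* 4 KiB pages */
#define TOY_PGDIR_SHIFT  22                       /* each directory entry spans 4 MiB */
#define TOY_PTRS_PER_PTE 1024
#define TOY_OFFSET_MASK  ((1u << TOY_PAGE_SHIFT) - 1)
#define TOY_PTE_PRESENT  0x1u

/* Top 10 bits of the address select the page directory entry. */
static unsigned toy_pgd_index(uint32_t vaddr)
{
    return vaddr >> TOY_PGDIR_SHIFT;
}

/* Middle 10 bits select the entry inside the page table. */
static unsigned toy_pte_index(uint32_t vaddr)
{
    return (vaddr >> TOY_PAGE_SHIFT) & (TOY_PTRS_PER_PTE - 1);
}

/*
 * Translate vaddr using a toy directory whose entries are plain C
 * pointers to page tables. Each PTE is (frame address | flags).
 * Returns 0 and stores the physical address on success, -1 if the
 * address is not mapped.
 */
static int toy_translate(uint32_t **pgd, uint32_t vaddr, uint32_t *paddr)
{
    const uint32_t *pt = pgd[toy_pgd_index(vaddr)];
    uint32_t pte;

    if (pt == NULL)
        return -1;
    pte = pt[toy_pte_index(vaddr)];
    if (!(pte & TOY_PTE_PRESENT))
        return -1;
    *paddr = (pte & ~TOY_OFFSET_MASK) | (vaddr & TOY_OFFSET_MASK);
    return 0;
}
```

For the address 0xC0345678 the directory index is 0x300, the table index is 0x345 and the page offset is 0x678; if that PTE points at frame 0x00ABC000, the translation yields 0x00ABC678.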

Single address invalidation:

mov $addr, %eax

invlpg (%eax)

Full invalidation:

mov %cr3, %eax

mov %eax, %cr3
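Both instruction sequences above are privileged, so they cannot be tried from user space. The difference between them can still be illustrated with a toy model: a small direct-mapped translation cache with one operation that drops a single page and one that drops everything. The structure and all `toy_` names are hypothetical; a real TLB is set-associative hardware, and a CR3 reload leaves entries for global pages in place, which this sketch ignores.

```c
#include <stdbool.h>
#include <stdint.h>

#define TOY_TLB_ENTRIES 8
#define TOY_PAGE_SHIFT  12
#define TOY_OFFSET_MASK ((1u << TOY_PAGE_SHIFT) - 1)

struct toy_tlb_entry {
    bool valid;
    uint32_t vpn; /* virtual page number */
    uint32_t pfn; /* physical frame number */
};

struct toy_tlb {
    struct toy_tlb_entry e[TOY_TLB_ENTRIES];
};

/* Cache the translation of the page containing vaddr. */
static void toy_tlb_insert(struct toy_tlb *t, uint32_t vaddr, uint32_t paddr)
{
    uint32_t vpn = vaddr >> TOY_PAGE_SHIFT;
    struct toy_tlb_entry *s = &t->e[vpn % TOY_TLB_ENTRIES];

    s->valid = true;
    s->vpn = vpn;
    s->pfn = paddr >> TOY_PAGE_SHIFT;
}

/* Return true and fill *paddr on a hit, false on a miss. */
static bool toy_tlb_lookup(const struct toy_tlb *t, uint32_t vaddr, uint32_t *paddr)
{
    uint32_t vpn = vaddr >> TOY_PAGE_SHIFT;
    const struct toy_tlb_entry *s = &t->e[vpn % TOY_TLB_ENTRIES];

    if (!s->valid || s->vpn != vpn)
        return false;
    *paddr = (s->pfn << TOY_PAGE_SHIFT) | (vaddr & TOY_OFFSET_MASK);
    return true;
}

/* Models invlpg: drop only the entry for the page containing vaddr. */
static void toy_tlb_invalidate_page(struct toy_tlb *t, uint32_t vaddr)
{
    uint32_t vpn = vaddr >> TOY_PAGE_SHIFT;
    struct toy_tlb_entry *s = &t->e[vpn % TOY_TLB_ENTRIES];

    if (s->valid && s->vpn == vpn)
        s->valid = false;
}

/* Models reloading CR3: drop every cached translation. */
static void toy_tlb_flush_all(struct toy_tlb *t)
{
    for (int i = 0; i < TOY_TLB_ENTRIES; i++)
        t->e[i].valid = false;
}
```

The single-page operation keeps unrelated translations warm, which is why it is preferred when only one mapping has changed.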

void* vmalloc(unsigned long size);

void vfree(void * addr);

void *ioremap(unsigned long offset, unsigned size);

void iounmap(void * addr);

set_fixmap(idx, phys_addr)

set_fixmap_nocache(idx, phys_addr)

/*

* Here we define all the compile-time 'special' virtual

* addresses. The point is to have a constant address at

* compile time, but to set the physical address only

* in the boot process.

* for x86_32: We allocate these special addresses

* from the end of virtual memory (0xfffff000) backwards.

* Also this lets us do fail-safe vmalloc(), we

* can guarantee that these special addresses and

* vmalloc()-ed addresses never overlap.

*

* These 'compile-time allocated' memory buffers are

* fixed-size 4k pages (or larger if used with an increment

* higher than 1). Use set_fixmap(idx,phys) to associate

* physical memory with fixmap indices.

*

* TLB entries of such buffers will not be flushed across

* task switches.

*/

enum fixed_addresses {

#ifdef CONFIG_X86_32

FIX_HOLE,

#else

#ifdef CONFIG_X86_VSYSCALL_EMULATION

VSYSCALL_PAGE = (FIXADDR_TOP - VSYSCALL_ADDR) >> PAGE_SHIFT,

#endif

#endif

FIX_DBGP_BASE,

FIX_EARLYCON_MEM_BASE,

#ifdef CONFIG_PROVIDE_OHCI1394_DMA_INIT

FIX_OHCI1394_BASE,

#endif

#ifdef CONFIG_X86_LOCAL_APIC

FIX_APIC_BASE, /* local (CPU) APIC -- required for SMP or not */

#endif

#ifdef CONFIG_X86_IO_APIC

FIX_IO_APIC_BASE_0,

FIX_IO_APIC_BASE_END = FIX_IO_APIC_BASE_0 + MAX_IO_APICS - 1,

#endif

#ifdef CONFIG_X86_32

FIX_KMAP_BEGIN, /* reserved pte's for temporary kernel mappings */

FIX_KMAP_END = FIX_KMAP_BEGIN+(KM_TYPE_NR*NR_CPUS)-1,

#ifdef CONFIG_PCI_MMCONFIG

FIX_PCIE_MCFG,

#endif

#endif /* CONFIG_X86_32 */

/* ... remaining entries omitted ... */

__end_of_fixed_addresses

};

#define __fix_to_virt(x) (FIXADDR_TOP - ((x) << PAGE_SHIFT))

#define __virt_to_fix(x) ((FIXADDR_TOP - ((x)&PAGE_MASK)) >> PAGE_SHIFT)

#ifndef __ASSEMBLY__

/*

* 'index to address' translation. If anyone tries to use the idx

* directly without translation, we catch the bug with a NULL-dereference

* kernel oops. Illegal ranges of incoming indices are caught too.

*/

static __always_inline unsigned long fix_to_virt(const unsigned int idx)

{

BUILD_BUG_ON(idx >= __end_of_fixed_addresses);

return __fix_to_virt(idx);

}

static inline unsigned long virt_to_fix(const unsigned long vaddr)

{

BUG_ON(vaddr >= FIXADDR_TOP || vaddr < FIXADDR_START);

return __virt_to_fix(vaddr);

}

inline long fix_to_virt(const unsigned int idx)

{

if (idx >= __end_of_fixed_addresses)

__this_fixmap_does_not_exist();

return (0xffffe000UL - (idx << PAGE_SHIFT));

}
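The index-to-address arithmetic in the `fix_to_virt()` variants above can be checked in user space. This sketch assumes a 32-bit address space, 4 KiB pages and the top address 0xffffe000 that appears in the older `fix_to_virt()`; the `toy_` names are hypothetical stand-ins for the kernel macros.

```c
#include <stdint.h>

#define TOY_PAGE_SHIFT  12
#define TOY_PAGE_MASK   (~((1u << TOY_PAGE_SHIFT) - 1))
#define TOY_FIXADDR_TOP 0xffffe000u /* assumed; taken from the older fix_to_virt() */

/* Slots grow downwards: index 0 sits at the top, each next index one page lower. */
static uint32_t toy_fix_to_virt(unsigned idx)
{
    return TOY_FIXADDR_TOP - ((uint32_t)idx << TOY_PAGE_SHIFT);
}

/* Inverse mapping: any address inside a slot yields that slot's index. */
static unsigned toy_virt_to_fix(uint32_t vaddr)
{
    return (TOY_FIXADDR_TOP - (vaddr & TOY_PAGE_MASK)) >> TOY_PAGE_SHIFT;
}
```

Index 3 maps to 0xffffb000, and any address in the page starting there, such as 0xffffb7f0, maps back to index 3.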

kmap_atomic(), kunmap_atomic()

#define kmap_atomic(page) kmap_atomic_prot(page, kmap_prot)

void *kmap_atomic_high_prot(struct page *page, pgprot_t prot)

{

unsigned long vaddr;

int idx, type;

type = kmap_atomic_idx_push();

idx = type + KM_TYPE_NR*smp_processor_id();

vaddr = __fix_to_virt(FIX_KMAP_BEGIN + idx);

BUG_ON(!pte_none(*(kmap_pte-idx)));

set_pte(kmap_pte-idx, mk_pte(page, prot));

arch_flush_lazy_mmu_mode();

return (void *)vaddr;

}

EXPORT_SYMBOL(kmap_atomic_high_prot);

static inline int kmap_atomic_idx_push(void)

{

int idx = __this_cpu_inc_return(__kmap_atomic_idx) - 1;

#ifdef CONFIG_DEBUG_HIGHMEM

WARN_ON_ONCE(in_irq() && !irqs_disabled());

BUG_ON(idx >= KM_TYPE_NR);

#endif

return idx;

}
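The slot selection in `kmap_atomic_high_prot()` combines a per-CPU nesting depth with the CPU number to pick a fixmap page. Below is a user-space sketch of that calculation. Every constant is an assumption chosen for illustration (`TOY_KM_TYPE_NR`, `TOY_FIX_KMAP_BEGIN`, `TOY_NR_CPUS` and the top address 0xffffe000), and the CPU is passed as a parameter because there is no `smp_processor_id()` outside the kernel.

```c
#include <stdint.h>

#define TOY_PAGE_SHIFT     12
#define TOY_FIXADDR_TOP    0xffffe000u /* assumed top of the fixmap area */
#define TOY_KM_TYPE_NR     20          /* assumed number of slots per CPU */
#define TOY_NR_CPUS        4           /* assumed CPU count */
#define TOY_FIX_KMAP_BEGIN 8           /* assumed index of the first kmap slot */

/* Per-CPU nesting depth, standing in for __kmap_atomic_idx. */
static int toy_kmap_depth[TOY_NR_CPUS];

/* Reserve the next slot on this CPU and return its position in the stack. */
static int toy_kmap_idx_push(int cpu)
{
    return toy_kmap_depth[cpu]++;
}

/* Release the most recently reserved slot on this CPU. */
static void toy_kmap_idx_pop(int cpu)
{
    toy_kmap_depth[cpu]--;
}

/* Virtual address of the slot a new atomic mapping on this CPU would use. */
static uint32_t toy_kmap_slot_vaddr(int cpu)
{
    int type = toy_kmap_idx_push(cpu);
    int idx = type + TOY_KM_TYPE_NR * cpu;

    return TOY_FIXADDR_TOP - ((uint32_t)(TOY_FIX_KMAP_BEGIN + idx) << TOY_PAGE_SHIFT);
}
```

Since each CPU owns a disjoint range of slots and mappings are released in reverse order, no locking is needed to choose a slot; nested mappings on one CPU simply take consecutive pages.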

kmap(), kunmap()