System Calls

- Linux system calls implementation

- VDSO and virtual syscalls

- Accessing user space from system calls

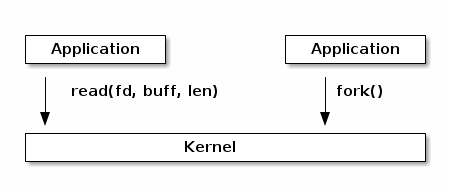

System Calls as Kernel services

System Call Setup

- set up the information that identifies the system call and its parameters

- trigger a kernel mode switch

- retrieve the result of the system call

Linux system call setup

- System calls are identified by numbers

- System call parameters are machine-word sized (32 or 64 bits), and a system call takes at most 6 of them

- Both the system call number and the parameters are passed in registers (e.g. on 32-bit x86: EAX for the system call number and EBX, ECX, EDX, ESI, EDI, EBP for the parameters)
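To see that a system call is identified by a number, the sketch below asks for the process ID through the generic libc `syscall()` wrapper rather than through `getpid()`. The helper name `getpid_by_number` is made up for this illustration; `syscall()` and `SYS_getpid` are the standard Linux/glibc names.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sys/syscall.h>   /* SYS_getpid: the system call number */
#include <unistd.h>        /* syscall(), getpid() */

/* Hypothetical helper: request the PID by system call number.
 * libc places the number and the arguments in the registers that the
 * architecture's system call convention expects, then traps. */
long getpid_by_number(void)
{
    return syscall(SYS_getpid);
}
```

Calling `getpid_by_number()` should return the same value as `getpid()`, since both end up in the same kernel function.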

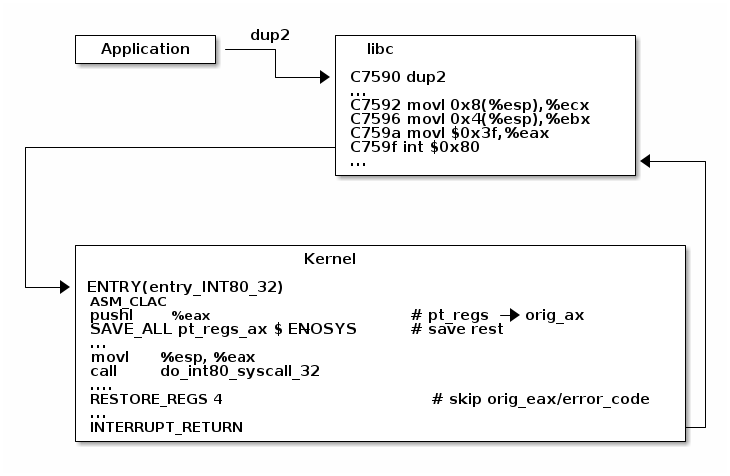

Example of Linux system call setup and handling

Linux System Call Dispatcher

/* Handles int $0x80 */

__visible void do_int80_syscall_32(struct pt_regs *regs)

{

enter_from_user_mode();

local_irq_enable();

do_syscall_32_irqs_on(regs);

}

/* simplified version of the Linux x86 32bit System Call Dispatcher */

static __always_inline void do_syscall_32_irqs_on(struct pt_regs *regs)

{

unsigned int nr = regs->orig_ax;

if (nr < IA32_NR_syscalls)

regs->ax = ia32_sys_call_table[nr](regs->bx, regs->cx,

regs->dx, regs->si,

regs->di, regs->bp);

syscall_return_slowpath(regs);

}
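The dispatcher logic can be modelled in user space. The sketch below is only an illustration: `fake_regs`, `fake_table`, `fake_dispatch` and `fake_syscall` are invented names, and the two "system calls" are toy functions. It assumes, as the kernel entry code does, that the return register is preloaded with `-ENOSYS`, so an out-of-range number leaves that error in place.

```c
#include <assert.h>
#include <errno.h>

/* Toy stand-in for struct pt_regs (hypothetical, not the kernel layout). */
struct fake_regs { long orig_ax, ax, bx, cx, dx, si, di, bp; };

typedef long (*fake_call_t)(long, long, long, long, long, long);

/* Toy "system calls": every handler receives six word-sized arguments. */
static long fake_sum6(long a, long b, long c, long d, long e, long f)
{
    return a + b + c + d + e + f;
}

static long fake_first(long a, long b, long c, long d, long e, long f)
{
    (void)b; (void)c; (void)d; (void)e; (void)f;
    return a;
}

static const fake_call_t fake_table[] = { fake_sum6, fake_first };
#define FAKE_NR_CALLS (sizeof(fake_table) / sizeof(fake_table[0]))

/* Same shape as the dispatcher above: bounds check, then indexed call. */
void fake_dispatch(struct fake_regs *regs)
{
    unsigned int nr = (unsigned int)regs->orig_ax;

    if (nr < FAKE_NR_CALLS)
        regs->ax = fake_table[nr](regs->bx, regs->cx, regs->dx,
                                  regs->si, regs->di, regs->bp);
}

/* Model of the entry path: fill the registers, preload -ENOSYS, dispatch. */
long fake_syscall(long nr, long a, long b)
{
    struct fake_regs regs = { .orig_ax = nr, .ax = -ENOSYS, .bx = a, .cx = b };

    fake_dispatch(&regs);
    return regs.ax;
}
```

Because the number is compared as an unsigned value, a negative number also fails the bounds check instead of indexing before the start of the table.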

Inspecting dup2 system call

System Call Flow Summary

- The application sets up the system call number and parameters, then issues a trap instruction

- The execution mode switches from user to kernel; the CPU switches to a kernel stack; the user stack pointer and the user-space return address are saved on the kernel stack

- The kernel entry point saves registers on the kernel stack

- The system call dispatcher identifies the system call function and runs it

- The user space registers are restored and execution switches back to user mode (e.g. by executing IRET)

- The user space application resumes
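The user-space half of this flow (load registers, trap, read the result) can be sketched with inline assembly. This is a minimal sketch for x86-64 Linux, which uses the `syscall` instruction with RAX for the number and RDI, RSI, RDX for the first three parameters, rather than the 32-bit `int $0x80` convention shown earlier. The function name `raw_syscall3` is hypothetical; on other architectures the sketch falls back to libc's `syscall()` and converts its result to the kernel's negative-errno form.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <sys/syscall.h>
#include <unistd.h>

/* Hypothetical helper: issue a system call with up to three arguments and
 * return the raw kernel result (a negative errno value on failure). */
long raw_syscall3(long nr, long a1, long a2, long a3)
{
#if defined(__x86_64__) && defined(__linux__)
    long ret;

    /* Number in RAX, parameters in RDI/RSI/RDX; the result comes back in RAX.
     * The syscall instruction itself clobbers RCX and R11. */
    __asm__ volatile("syscall"
                     : "=a"(ret)
                     : "a"(nr), "D"(a1), "S"(a2), "d"(a3)
                     : "rcx", "r11", "memory");
    return ret;
#else
    /* Fallback: let libc trap, then undo its -1/errno translation. */
    long ret = syscall(nr, a1, a2, a3);

    return ret == -1 ? -errno : ret;
#endif
}
```

For example, `raw_syscall3(SYS_close, -1, 0, 0)` should return `-EBADF`: the kernel reports errors as negative values in the result register, and libc wrappers turn them into `-1` plus `errno`.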

System Call Table

#define __SYSCALL_I386(nr, sym, qual) [nr] = sym,

const sys_call_ptr_t ia32_sys_call_table[] = {

[0 ... __NR_syscall_compat_max] = &sys_ni_syscall,

#include <asm/syscalls_32.h>

};

__SYSCALL_I386(0, sys_restart_syscall, )

__SYSCALL_I386(1, sys_exit, )

#ifdef CONFIG_X86_32

__SYSCALL_I386(2, sys_fork, )

#else

__SYSCALL_I386(2, sys_fork, )

#endif

__SYSCALL_I386(3, sys_read, )

__SYSCALL_I386(4, sys_write, )
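The table-building trick above (fill every slot with a default, then let a macro override individual entries) can be tried in user space. The sketch below uses invented names (`DEMO_SYSCALL`, `demo_table`, `demo_ni`, and so on) and relies on the GNU range designator `[a ... b]`, which GCC and Clang accept as an extension; some warning levels flag the overridden initializers.

```c
#include <assert.h>
#include <errno.h>

typedef long (*demo_call_t)(void);

#define DEMO_NR_MAX 7                   /* highest valid number in this toy table */

/* Default entry, playing the role of sys_ni_syscall ("not implemented"). */
static long demo_ni(void)    { return -ENOSYS; }
static long demo_zero(void)  { return 100; }
static long demo_three(void) { return 103; }

/* Same idea as __SYSCALL_I386: expand to a designated initializer. */
#define DEMO_SYSCALL(nr, sym) [nr] = sym,

static const demo_call_t demo_table[] = {
    [0 ... DEMO_NR_MAX] = demo_ni,      /* GNU extension: fill the whole range */
    DEMO_SYSCALL(0, demo_zero)          /* later initializers override the default */
    DEMO_SYSCALL(3, demo_three)
};

/* Look up and run an entry; numbers past the table are rejected. */
long demo_call(unsigned int nr)
{
    return nr <= DEMO_NR_MAX ? demo_table[nr]() : -ENOSYS;
}
```

Slots that no macro line overrides keep the default handler, so an unassigned number fails cleanly instead of jumping through a null pointer.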

System Calls Pointer Parameters

- Never allow pointers to kernel-space

- Check for invalid pointers

Pointers to Kernel Space

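- If a write system call accepted a kernel-space pointer, the user could read kernel data (the kernel would copy it out on the user's behalf)

- If a read system call accepted a kernel-space pointer, the user could corrupt kernel data (the kernel would overwrite it with user-supplied content)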

Invalid pointers handling approaches

- Check the pointer against the user address space before using it, or

- Skip the check, rely on the MMU to fault when the pointer is invalid, and let the page fault handler determine that the pointer was invalid
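Whichever approach the kernel takes, user space observes the same outcome: the system call fails with `EFAULT` instead of crashing. The sketch below is one way to observe this, assuming a Linux system; the helper names `write_errno` and `inaccessible_page` are made up for the example. It writes to a pipe from a page mapped with `PROT_NONE`, which is a guaranteed-invalid source buffer.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical helper: write len bytes from buf into a fresh pipe.
 * Returns 0 on success, otherwise the errno value reported by the kernel. */
int write_errno(const void *buf, size_t len)
{
    int fds[2];
    int err = 0;

    if (pipe(fds) != 0)
        return errno;
    if (write(fds[1], buf, len) < 0)
        err = errno;
    close(fds[0]);
    close(fds[1]);
    return err;
}

/* Hypothetical helper: map one page that can be neither read nor written. */
void *inaccessible_page(void)
{
    return mmap(NULL, (size_t)sysconf(_SC_PAGESIZE), PROT_NONE,
                MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
}
```

A write from a normal buffer succeeds, while a write from the `PROT_NONE` page is expected to fail with `EFAULT`; the calling process keeps running in both cases.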

Page fault handling

- Copy on write, demand paging, swapping: both the faulting instruction and the accessed (fault) address are in user space; the fault address is valid (it is checked against the user address space)

- Invalid pointer used in a system call: the faulting instruction is in kernel space; the fault address is in user space and it is invalid

- Kernel bug (the kernel accesses an invalid pointer): looks the same as the previous case to the page fault handler

Marking kernel code that accesses user space

- The exact instructions that access user space are recorded in a table (exception table)

- When a page fault occurs, the address of the faulting instruction is looked up in this table
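The lookup can be modelled as a binary search over a table sorted by instruction address. The sketch below is a user-space illustration only: the `demo_` names and the addresses in the table are invented, and absolute addresses are used for simplicity.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Toy exception table entry: where a fault may happen, where to resume. */
struct demo_extable_entry {
    unsigned long insn;    /* address of an instruction that may fault */
    unsigned long fixup;   /* address to continue at after the fault */
};

/* Must stay sorted by insn so that it can be binary searched. */
static const struct demo_extable_entry demo_extable[] = {
    { 0x1000, 0x9000 },
    { 0x1040, 0x9010 },
    { 0x2000, 0x9020 },
};

static int demo_cmp(const void *key, const void *elem)
{
    unsigned long ip = *(const unsigned long *)key;
    const struct demo_extable_entry *e = elem;

    return (ip > e->insn) - (ip < e->insn);
}

/* Returns 1 and redirects *ip to the fixup code if the faulting instruction
 * is listed; returns 0 and leaves *ip alone otherwise (a genuine bug). */
int demo_fixup(unsigned long *ip)
{
    const struct demo_extable_entry *e =
        bsearch(ip, demo_extable,
                sizeof(demo_extable) / sizeof(demo_extable[0]),
                sizeof(demo_extable[0]), demo_cmp);

    if (e == NULL)
        return 0;
    *ip = e->fixup;
    return 1;
}
```

A fault at a listed address is treated as a bad user pointer and execution resumes at the fixup; a fault anywhere else is not recoverable this way.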

Cost analysis for pointer checks vs fault handling

| Case | Pointer checks | Fault handling |
|---|---|---|
| Valid address | address space search | negligible |
| Invalid address | address space search | exception table search |

Virtual Dynamic Shared Object (VDSO)

- The kernel generates the stream of instructions that issues the system call in a special memory area (formatted as an ELF shared object)

- That memory area is mapped towards the end of the user address space

- libc looks for the VDSO and, if it is present, uses it to issue the system call
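A process can locate its own VDSO mapping through the auxiliary vector. The sketch below assumes Linux with glibc (or another libc that provides `getauxval()`); the function name `vdso_is_elf` is hypothetical. It checks that the mapping starts with the ELF magic bytes, which is what "formatted as an ELF shared object" implies.

```c
#include <assert.h>
#include <elf.h>        /* AT_SYSINFO_EHDR, ELFMAG, SELFMAG */
#include <stddef.h>
#include <string.h>
#include <sys/auxv.h>   /* getauxval() */

/* Hypothetical helper.
 * Returns  1 if a VDSO is mapped and starts with the ELF magic,
 *          0 if something is mapped there but it is not an ELF image,
 *         -1 if the kernel did not advertise a VDSO to this process. */
int vdso_is_elf(void)
{
    const void *base = (const void *)getauxval(AT_SYSINFO_EHDR);

    if (base == NULL)
        return -1;
    return memcmp(base, ELFMAG, SELFMAG) == 0;
}
```

The same address range usually shows up as `[vdso]` in `/proc/self/maps`.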

Inspecting VDSO

Virtual System Calls (vsyscalls)

- "System calls" that run directly from user space, part of the VDSO

- Static data (e.g. getpid())

- Dynamic data updated by the kernel in a RW map of the VDSO (e.g. gettimeofday(), time())
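The difference between a virtual system call and a real one can be made visible by reading the same clock both ways. This is a sketch assuming a 64-bit Linux system, where libc's `struct timespec` matches the kernel's layout and where libc typically (but not necessarily) services `clock_gettime()` from the VDSO. The function names are invented for the example.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sys/syscall.h>
#include <time.h>
#include <unistd.h>

/* Read CLOCK_MONOTONIC through libc, which may use the VDSO (no trap). */
long long monotonic_ns_libc(void)
{
    struct timespec ts;

    if (clock_gettime(CLOCK_MONOTONIC, &ts) != 0)
        return -1;
    return ts.tv_sec * 1000000000LL + ts.tv_nsec;
}

/* Read the same clock by forcing a real system call.
 * Assumption: struct timespec has the kernel's layout (true on 64-bit Linux). */
long long monotonic_ns_trap(void)
{
    struct timespec ts;

    if (syscall(SYS_clock_gettime, CLOCK_MONOTONIC, &ts) != 0)
        return -1;
    return ts.tv_sec * 1000000000LL + ts.tv_nsec;
}
```

Both paths read the same kernel-maintained time data, so consecutive readings are consistent; timing many calls of each in a loop usually shows the libc path to be noticeably cheaper when the VDSO is in use.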

Accessing user space from system calls

/* OK: return -EFAULT if user_ptr is invalid */

if (copy_from_user(&kernel_buffer, user_ptr, size))

return -EFAULT;

/* NOK: only works if user_ptr is valid, otherwise it crashes the kernel */

memcpy(&kernel_buffer, user_ptr, size);

get_user implementation

#define get_user(x, ptr) \

({ \

int __ret_gu; \

register __inttype(*(ptr)) __val_gu asm("%"_ASM_DX); \

__chk_user_ptr(ptr); \

might_fault(); \

asm volatile("call __get_user_%P4" \

: "=a" (__ret_gu), "=r" (__val_gu), \

ASM_CALL_CONSTRAINT \

: "0" (ptr), "i" (sizeof(*(ptr)))); \

(x) = (__force __typeof__(*(ptr))) __val_gu; \

__builtin_expect(__ret_gu, 0); \

})

get_user pseudo code

#define get_user(x, ptr) \

movl ptr, %eax \

call __get_user_1 \

movl %edx, x \

movl %eax, result \

get_user_1 implementation

.text

ENTRY(__get_user_1)

mov PER_CPU_VAR(current_task), %_ASM_DX

cmp TASK_addr_limit(%_ASM_DX),%_ASM_AX

jae bad_get_user

ASM_STAC

1: movzbl (%_ASM_AX),%edx

xor %eax,%eax

ASM_CLAC

ret

ENDPROC(__get_user_1)

bad_get_user:

xor %edx,%edx

mov $(-EFAULT),%_ASM_AX

ASM_CLAC

ret

END(bad_get_user)

_ASM_EXTABLE(1b,bad_get_user)
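The pattern in `__get_user_1` (attempt the access, and if it faults, resume at a fixup that returns `-EFAULT`) has a rough user-space analogue built from a `SIGSEGV` handler and `sigsetjmp`/`siglongjmp`. The sketch below is an analogy only, not how the kernel does it: the names `try_get_byte` and `inaccessible_page` are invented, and the signal handler plays the role that the exception table fixup plays in the kernel.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <errno.h>
#include <setjmp.h>
#include <signal.h>
#include <stddef.h>
#include <string.h>
#include <sys/mman.h>
#include <unistd.h>

static sigjmp_buf fixup_env;

/* Plays the role of the fixup: abandon the access and resume elsewhere. */
static void on_fault(int sig)
{
    (void)sig;
    siglongjmp(fixup_env, 1);
}

/* Hypothetical helper: read one byte from ptr.
 * Returns 0 and stores the byte in *out, or -EFAULT (and stores 0) on a fault. */
int try_get_byte(unsigned char *out, const volatile unsigned char *ptr)
{
    struct sigaction sa, old_segv, old_bus;
    int ret;

    memset(&sa, 0, sizeof(sa));
    sa.sa_handler = on_fault;
    sigemptyset(&sa.sa_mask);
    sigaction(SIGSEGV, &sa, &old_segv);
    sigaction(SIGBUS, &sa, &old_bus);

    if (sigsetjmp(fixup_env, 1) == 0) {
        *out = *ptr;            /* the one access that is allowed to fault */
        ret = 0;
    } else {
        *out = 0;               /* like bad_get_user: zero value, error code */
        ret = -EFAULT;
    }

    sigaction(SIGSEGV, &old_segv, NULL);
    sigaction(SIGBUS, &old_bus, NULL);
    return ret;
}

/* Hypothetical helper: one page that cannot be read. */
void *inaccessible_page(void)
{
    return mmap(NULL, (size_t)sysconf(_SC_PAGESIZE), PROT_NONE,
                MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
}
```

As in the kernel version, the valid case pays almost nothing extra, and all of the recovery work happens only when the access actually faults.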

Exception table entry

/* Exception table entry */

# define _ASM_EXTABLE_HANDLE(from, to, handler) \

.pushsection "__ex_table","a" ; \

.balign 4 ; \

.long (from) - . ; \

.long (to) - . ; \

.long (handler) - . ; \

.popsection

# define _ASM_EXTABLE(from, to) \

_ASM_EXTABLE_HANDLE(from, to, ex_handler_default)
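Each `.long (x) - .` stores a 32-bit offset relative to the location of the field itself, which keeps entries small and position independent. Decoding therefore adds the stored offset to the address of the field. The sketch below illustrates that encoding in user space with invented names (`rel_entry`, `rel_set`, `rel_get`, `demo_image`) and only two of the three fields; the code buffer and the entry live in one object so the offset is certain to fit in 32 bits.

```c
#include <assert.h>
#include <stdint.h>

/* Toy entry with self-relative fields (the real entry also has a handler). */
struct rel_entry {
    int32_t insn;
    int32_t fixup;
};

/* Stand-in for a kernel image: some "code" bytes followed by one entry. */
static struct {
    unsigned char code[64];
    struct rel_entry entry;
} demo_image;

/* Encode: store the distance from the field to the target, like "(x) - .". */
static void rel_set(int32_t *field, const void *target)
{
    *field = (int32_t)((intptr_t)target - (intptr_t)field);
}

/* Decode: the address of the field plus the stored offset. */
static const void *rel_get(const int32_t *field)
{
    return (const void *)((intptr_t)field + *field);
}

/* Address of a byte inside the toy code area (offset must be below 64). */
const void *demo_code_addr(unsigned int offset)
{
    return &demo_image.code[offset];
}

/* Encode a target into the entry, then decode it again. */
const void *demo_roundtrip(unsigned int offset)
{
    rel_set(&demo_image.entry.insn, demo_code_addr(offset));
    return rel_get(&demo_image.entry.insn);
}
```

Decoding returns the original address, and an entry of two such fields takes 8 bytes regardless of the pointer size.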

Exception table building

#define EXCEPTION_TABLE(align) \

. = ALIGN(align); \

__ex_table : AT(ADDR(__ex_table) - LOAD_OFFSET) { \

VMLINUX_SYMBOL(__start___ex_table) = .; \

KEEP(*(__ex_table)) \

VMLINUX_SYMBOL(__stop___ex_table) = .; \

}

Exception table handling

bool ex_handler_default(const struct exception_table_entry *fixup,

struct pt_regs *regs, int trapnr)

{

regs->ip = ex_fixup_addr(fixup);

return true;

}

int fixup_exception(struct pt_regs *regs, int trapnr)

{

const struct exception_table_entry *e;

ex_handler_t handler;

e = search_exception_tables(regs->ip);

if (!e)

return 0;

handler = ex_fixup_handler(e);

return handler(e, regs, trapnr);

}