System Calls

- Linux system calls implementation

- VDSO and virtual syscalls

- Accessing user space from system calls

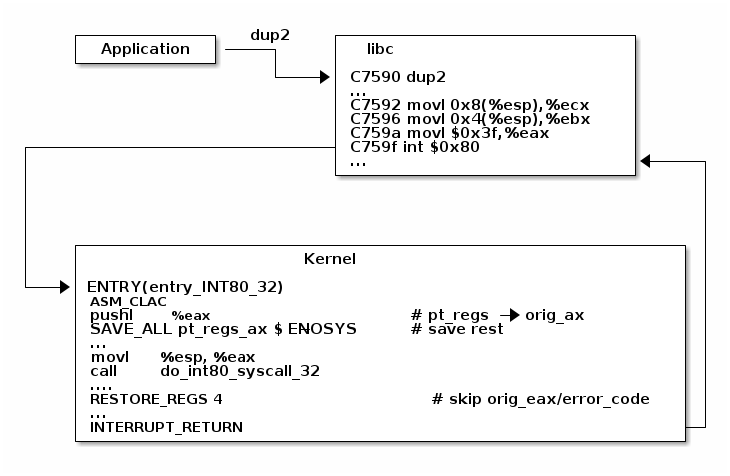

```c
/* Handles int $0x80 */
__visible void do_int80_syscall_32(struct pt_regs *regs)
{
	enter_from_user_mode();
	local_irq_enable();
	do_syscall_32_irqs_on(regs);
}
```

```c
/* Simplified version of the Linux x86 32-bit system call dispatcher */
static __always_inline void do_syscall_32_irqs_on(struct pt_regs *regs)
{
	unsigned int nr = regs->orig_ax;	/* system call number */

	/* Out-of-range numbers keep the -ENOSYS stored in regs->ax by the entry code */
	if (nr < IA32_NR_syscalls)
		regs->ax = ia32_sys_call_table[nr](regs->bx, regs->cx,
						   regs->dx, regs->si,
						   regs->di, regs->bp);

	syscall_return_slowpath(regs);
}
```
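To make the register-to-argument mapping concrete, here is a small user-space model of the dispatcher. It is a sketch under our own assumptions: `struct demo_regs`, `demo_table` and the two handlers are hypothetical stand-ins for `struct pt_regs`, `ia32_sys_call_table` and real system calls. As in the dispatcher above, the number is treated as unsigned, so a negative value fails the range check; an out-of-range number yields `-ENOSYS`, which our model sets in the dispatcher itself while the kernel gets it from the entry code.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>

/* Hypothetical register snapshot: return value, syscall number and the
 * six i386 argument registers in ABI order (bx, cx, dx, si, di, bp). */
struct demo_regs {
	long ax, orig_ax;
	long bx, cx, dx, si, di, bp;
};

typedef long (*demo_call_t)(long, long, long, long, long, long);

/* Two illustrative handlers; arguments they do not need are ignored. */
static long demo_getanswer(long a, long b, long c, long d, long e, long f)
{
	(void)a; (void)b; (void)c; (void)d; (void)e; (void)f;
	return 42;
}

static long demo_add3(long a, long b, long c, long d, long e, long f)
{
	(void)d; (void)e; (void)f;
	return a + b + c;
}

static const demo_call_t demo_table[] = { demo_getanswer, demo_add3 };
#define DEMO_NR_SYSCALLS (sizeof(demo_table) / sizeof(demo_table[0]))

static void demo_dispatch(struct demo_regs *regs)
{
	/* unsigned: a negative number becomes huge and fails the check */
	unsigned long nr = (unsigned long)regs->orig_ax;

	regs->ax = -ENOSYS;	/* default for unknown system calls */
	if (nr < DEMO_NR_SYSCALLS)
		regs->ax = demo_table[nr](regs->bx, regs->cx, regs->dx,
					  regs->si, regs->di, regs->bp);
}
```

For example, with `orig_ax = 1` and `bx, cx, dx = 1, 2, 3`, `demo_dispatch()` leaves 6 in `ax`.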

```c
#define __SYSCALL_I386(nr, sym, qual) [nr] = sym,

const sys_call_ptr_t ia32_sys_call_table[] = {
	[0 ... __NR_syscall_compat_max] = &sys_ni_syscall,
#include <asm/syscalls_32.h>
};
```

```c
/* Excerpt from the generated <asm/syscalls_32.h> (qual is left empty) */
__SYSCALL_I386(0, sys_restart_syscall, )
__SYSCALL_I386(1, sys_exit, )
__SYSCALL_I386(2, sys_fork, )
__SYSCALL_I386(3, sys_read, )
__SYSCALL_I386(4, sys_write, )
#ifdef CONFIG_X86_32
__SYSCALL_I386(5, sys_open, )
#else
__SYSCALL_I386(5, compat_sys_open, )
#endif
__SYSCALL_I386(6, sys_close, )
```
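The table is built with two compiler tricks: a GNU range designator that first fills every slot with `sys_ni_syscall`, and an X-macro that turns each `__SYSCALL_I386()` line into a later designator overriding its slot. The sketch below reproduces both in a single file; `DEMO_SYSCALL_LIST` is a hypothetical stand-in for the generated header, and the `demo_*` handlers are placeholders returning made-up values.

```c
#include <assert.h>
#include <errno.h>

/* Hypothetical stand-in for <asm/syscalls_32.h>: one ENTRY(nr, sym, qual)
 * per system call; numbers 2, 4 and 5 are deliberately missing. */
#define DEMO_SYSCALL_LIST(ENTRY) \
	ENTRY(0, demo_restart, ) \
	ENTRY(1, demo_exit, ) \
	ENTRY(3, demo_read, )

#define DEMO_NR_MAX 5	/* highest index, like __NR_syscall_compat_max */

typedef long (*demo_call_t)(void);

static long demo_ni_syscall(void) { return -ENOSYS; }
static long demo_restart(void) { return 100; }
static long demo_exit(void) { return 101; }
static long demo_read(void) { return 103; }

/* Same expansion as __SYSCALL_I386 above: "[nr] = sym," */
#define DEMO_TABLE_ENTRY(nr, sym, qual) [nr] = sym,

static const demo_call_t demo_table[DEMO_NR_MAX + 1] = {
	/* GNU range designator: every slot starts as "not implemented" */
	[0 ... DEMO_NR_MAX] = demo_ni_syscall,
	/* later designators override the implemented slots */
	DEMO_SYSCALL_LIST(DEMO_TABLE_ENTRY)
};

static long demo_call(unsigned int nr)
{
	return nr <= DEMO_NR_MAX ? demo_table[nr]() : -ENOSYS;
}
```

Slots 2, 4 and 5 have no list entry, so they keep `demo_ni_syscall` and return `-ENOSYS`. GCC accepts the overriding initializers and only warns about them under `-Woverride-init`, which `-Wextra` enables.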

- Copy on write, demand paging, swapping: both the faulting instruction and the fault address (the address being accessed) are in user space; the fault address is valid (checked against the user address space)

- Invalid pointer used in a system call: the faulting instruction is in kernel space; the fault address is in user space and is invalid

- Kernel bug (the kernel accesses an invalid pointer): same as above, so the two addresses alone cannot distinguish this case from the previous one
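These cases can be condensed into the decision a page fault handler has to make. The following is a deliberately simplified model, not the kernel's actual handler: `struct demo_fault` and `demo_classify()` are hypothetical, permission checks are ignored, and a fourth outcome (a user-mode access to a bad address, which gets a signal) is included only so the function covers every input. It shows why extra information is needed: the invalid-pointer case and the kernel-bug case have identical addresses, and only the `ip_in_extable` flag separates them.

```c
#include <assert.h>
#include <stdbool.h>

enum demo_fault_action {
	DEMO_RESOLVE,	/* valid user address: page in / copy on write, retry */
	DEMO_SIGSEGV,	/* user code touched a bad address */
	DEMO_FIXUP,	/* whitelisted kernel instruction: resume at fixup, -EFAULT */
	DEMO_OOPS,	/* anything else: kernel bug */
};

/* Hypothetical summary of one page fault. */
struct demo_fault {
	bool ip_in_user;	/* faulting instruction is in user space */
	bool addr_in_user;	/* fault address is a user address */
	bool addr_mapped;	/* fault address lies in a valid user mapping */
	bool ip_in_extable;	/* faulting instruction has an exception table entry */
};

static enum demo_fault_action demo_classify(const struct demo_fault *f)
{
	if (f->addr_in_user && f->addr_mapped)
		return DEMO_RESOLVE;
	if (f->ip_in_user)
		return DEMO_SIGSEGV;
	if (f->ip_in_extable)
		return DEMO_FIXUP;
	return DEMO_OOPS;
}
```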

| Cost | Pointer checks (before access) | Fault handling (on access) |
|---|---|---|
| Valid address | address space search | negligible |
| Invalid address | address space search | exception table search |
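The "address space search" in the Pointer checks column means looking up which mapping, if any, contains the pointer. A minimal sketch, assuming the address space is a sorted array of non-overlapping `[start, end)` ranges (the kernel keeps its memory areas in a tree instead); `struct demo_vma` and `demo_range_ok()` are hypothetical, and rejecting a range that straddles two adjacent mappings is a simplification of ours.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical mapping: [start, end), sorted by start, non-overlapping. */
struct demo_vma {
	unsigned long start, end;
};

/* Binary-search the mapping containing addr and require the whole
 * [addr, addr + len) range to fit inside that one mapping. */
static bool demo_range_ok(const struct demo_vma *vmas, size_t n,
			  unsigned long addr, unsigned long len)
{
	size_t lo = 0, hi = n;

	if (addr + len < addr)	/* range wraps around the address space */
		return false;
	while (lo < hi) {
		size_t mid = lo + (hi - lo) / 2;

		if (addr < vmas[mid].start)
			hi = mid;
		else if (addr >= vmas[mid].end)
			lo = mid + 1;
		else
			return addr + len <= vmas[mid].end;
	}
	return false;
}
```

This O(log n) lookup is paid on every access, even when the pointer is valid, which is the cost the fault handling approach avoids.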

```c
/* OK: return -EFAULT if user_ptr is invalid */
if (copy_from_user(&kernel_buffer, user_ptr, size))
	return -EFAULT;

/* NOT OK: works only if user_ptr is valid; otherwise it crashes the kernel */
memcpy(&kernel_buffer, user_ptr, size);
```
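`copy_from_user()` returns the number of bytes it could not copy, which is why any non-zero result is turned into `-EFAULT`. The sketch below models that contract in user space; `demo_user_mem`, `demo_copy_from_user()` and `demo_sys_sum()` are hypothetical, and "user memory" is just a 16-byte buffer whose valid offsets are 0 to 15. Like the kernel helper, it zero-fills the part of the destination it could not copy.

```c
#include <assert.h>
#include <errno.h>
#include <string.h>

#define DEMO_USER_SIZE 16
static unsigned char demo_user_mem[DEMO_USER_SIZE];	/* hypothetical "user memory" */

/* Returns the number of bytes NOT copied (0 on success), like copy_from_user().
 * "from" is an offset into demo_user_mem; offsets past the end are invalid. */
static unsigned long demo_copy_from_user(void *to, unsigned long from,
					 unsigned long n)
{
	unsigned long ok = 0;

	if (from < DEMO_USER_SIZE) {
		ok = DEMO_USER_SIZE - from;
		if (ok > n)
			ok = n;
		memcpy(to, demo_user_mem + from, ok);
	}
	/* zero the uncopied tail so no stale data is left in the buffer */
	memset((unsigned char *)to + ok, 0, n - ok);
	return n - ok;
}

/* Hypothetical system call body: sum four bytes read from user memory. */
static long demo_sys_sum(unsigned long user_ptr)
{
	unsigned char kbuf[4];

	if (demo_copy_from_user(kbuf, user_ptr, sizeof(kbuf)))
		return -EFAULT;
	return kbuf[0] + kbuf[1] + kbuf[2] + kbuf[3];
}
```

With the buffer filled with 0, 1, ..., 15, `demo_sys_sum(12)` reads the last four valid bytes and succeeds, while `demo_sys_sum(13)` runs one byte past the end and returns `-EFAULT`.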

```c
#define get_user(x, ptr)						\
({									\
	int __ret_gu;							\
	register __inttype(*(ptr)) __val_gu asm("%"_ASM_DX);		\
	__chk_user_ptr(ptr);						\
	might_fault();							\
	asm volatile("call __get_user_%P4"				\
		     : "=a" (__ret_gu), "=r" (__val_gu),		\
			ASM_CALL_CONSTRAINT				\
		     : "0" (ptr), "i" (sizeof(*(ptr))));		\
	(x) = (__force __typeof__(*(ptr))) __val_gu;			\
	__builtin_expect(__ret_gu, 0);					\
})
```
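`get_user()` selects a helper by the size of the pointed-to type: the `"i" (sizeof(*(ptr)))` operand is printed as a bare constant by the `%P4` modifier, so the call target becomes `__get_user_1`, `__get_user_2`, and so on. The following user-space sketch reproduces that compile-time selection with a `switch` on `sizeof`, using the same GNU statement-expression and `typeof` extensions; the `demo_get_user_N()` helpers are hypothetical and treat only a NULL pointer as invalid.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-ins for __get_user_1/2/4/8. NULL plays the role of an
 * invalid user address; on failure the value is 0, as in bad_get_user. */
#define DEMO_DEFINE_GET_USER(n, type)					\
	static int demo_get_user_##n(const void *p, uint64_t *val)	\
	{								\
		type v;							\
		if (!p) {						\
			*val = 0;					\
			return -EFAULT;					\
		}							\
		memcpy(&v, p, sizeof(v));				\
		*val = v;	/* widened without sign extension */	\
		return 0;						\
	}

DEMO_DEFINE_GET_USER(1, uint8_t)
DEMO_DEFINE_GET_USER(2, uint16_t)
DEMO_DEFINE_GET_USER(4, uint32_t)
DEMO_DEFINE_GET_USER(8, uint64_t)

/* Choose the helper from sizeof(*(ptr)) at compile time, store the value
 * converted back to the pointed-to type, and yield the error code. */
#define demo_get_user(x, ptr) ({					\
	uint64_t demo_val_ = 0;						\
	int demo_ret_ = -EFAULT;					\
	_Static_assert(sizeof(*(ptr)) == 1 || sizeof(*(ptr)) == 2 ||	\
		       sizeof(*(ptr)) == 4 || sizeof(*(ptr)) == 8,	\
		       "unsupported get_user size");			\
	switch (sizeof(*(ptr))) {					\
	case 1: demo_ret_ = demo_get_user_1((ptr), &demo_val_); break;	\
	case 2: demo_ret_ = demo_get_user_2((ptr), &demo_val_); break;	\
	case 4: demo_ret_ = demo_get_user_4((ptr), &demo_val_); break;	\
	case 8: demo_ret_ = demo_get_user_8((ptr), &demo_val_); break;	\
	}								\
	(x) = (__typeof__(*(ptr)))demo_val_;				\
	demo_ret_;							\
})
```

As with the kernel macro, a failed read stores 0 in `x` and the expression evaluates to `-EFAULT`.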

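```
/* Pseudo-code: roughly what get_user(x, ptr) boils down to for a 1-byte value.
 * The user address goes in %eax; __get_user_1 returns the value in %edx
 * and the error code (0 or -EFAULT) in %eax, which becomes the result. */
#define get_user(x, ptr) \
	movl ptr, %eax \
	call __get_user_1 \
	movl %edx, x \
	movl %eax, result
```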

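```asm
	.text
ENTRY(__get_user_1)
	mov PER_CPU_VAR(current_task), %_ASM_DX	/* dx = current task */
	cmp TASK_addr_limit(%_ASM_DX),%_ASM_AX	/* ax = user address */
	jae bad_get_user			/* address >= addr_limit: reject */
	ASM_STAC				/* allow access to user pages (SMAP) */
1:	movzbl (%_ASM_AX),%edx			/* may fault: covered by the extable entry */
	xor %eax,%eax				/* success: error code 0 */
	ASM_CLAC				/* forbid user page access again */
	ret
ENDPROC(__get_user_1)

bad_get_user:
	xor %edx,%edx				/* value = 0 */
	mov $(-EFAULT),%_ASM_AX			/* error code = -EFAULT */
	ASM_CLAC
	ret
END(bad_get_user)

	_ASM_EXTABLE(1b,bad_get_user)		/* a fault at label 1 resumes at bad_get_user */
```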
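Written out in C, `__get_user_1` implements a small register contract: the address arrives in `ax`, the zero-extended byte leaves in `dx`, and `ax` becomes the error code. In this hypothetical model (`struct demo_cpu`, `demo_addr_limit` and `demo_mem` are made up), addresses at or above the limit take the `jae bad_get_user` path, and an address below the limit but outside the mapped bytes stands in for a fault at label `1`, which the exception table redirects to the same `bad_get_user` code.

```c
#include <assert.h>
#include <errno.h>
#include <stdint.h>

/* Hypothetical registers. In: ax = user address.
 * Out: ax = 0 or -EFAULT, dx = zero-extended byte (0 on error). */
struct demo_cpu {
	unsigned long ax, dx;
};

/* Hypothetical environment: addresses below demo_addr_limit pass the limit
 * check, but only the first DEMO_MAPPED bytes are actually mapped. */
static const unsigned long demo_addr_limit = 16;
#define DEMO_MAPPED 8
static const uint8_t demo_mem[DEMO_MAPPED] = { 1, 2, 3, 0xfe, 5, 6, 7, 8 };

static void demo_get_user_1(struct demo_cpu *r)
{
	if (r->ax >= demo_addr_limit)	/* cmp TASK_addr_limit; jae bad_get_user */
		goto bad_get_user;
	if (r->ax >= DEMO_MAPPED)	/* label 1 would fault; the exception */
		goto bad_get_user;	/* table sends it to bad_get_user */
	r->dx = demo_mem[r->ax];	/* 1: movzbl (%_ASM_AX),%edx */
	r->ax = 0;			/* xor %eax,%eax */
	return;
bad_get_user:
	r->dx = 0;			/* xor %edx,%edx */
	r->ax = (unsigned long)-EFAULT;	/* mov $(-EFAULT),%_ASM_AX */
}
```

Reading address 3 yields `dx = 0xfe` rather than a sign-extended value, mirroring `movzbl`.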

```c
/* Exception table entry */
# define _ASM_EXTABLE_HANDLE(from, to, handler)		\
	.pushsection "__ex_table","a" ;			\
	.balign 4 ;					\
	.long (from) - . ;				\
	.long (to) - . ;				\
	.long (handler) - . ;				\
	.popsection

# define _ASM_EXTABLE(from, to)				\
	_ASM_EXTABLE_HANDLE(from, to, ex_handler_default)
```
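Each `.long (x) - .` in `_ASM_EXTABLE_HANDLE` stores a self-relative offset: the target address minus the address of the field itself. That keeps every field 32 bits wide even on 64-bit kernels and makes the table position-independent. The sketch below encodes and decodes such fields in C; `struct demo_extable_entry`, `demo_encode()`, `demo_decode()` and `demo_fill()` are hypothetical, and it assumes every target lies within ±2 GiB of the table, which holds here because everything lives in the same small binary.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical mirror of one entry: three 32-bit self-relative fields. */
struct demo_extable_entry {
	int32_t insn, fixup, handler;
};

/* What the assembler computes for ".long (target) - ." */
static int32_t demo_encode(const int32_t *field, uintptr_t target)
{
	return (int32_t)(intptr_t)(target - (uintptr_t)field);
}

/* Decoding adds the field's own address back, the same shape as
 * ex_fixup_addr(): (unsigned long)&x->fixup + x->fixup. */
static uintptr_t demo_decode(const int32_t *field)
{
	return (uintptr_t)field + (uintptr_t)(intptr_t)*field;
}

/* Hypothetical "code" and table in the same binary, so distances fit in 32 bits. */
static char demo_code[256];
static struct demo_extable_entry demo_entry;

static void demo_fill(struct demo_extable_entry *e, uintptr_t insn,
		      uintptr_t fixup, uintptr_t handler)
{
	e->insn = demo_encode(&e->insn, insn);
	e->fixup = demo_encode(&e->fixup, fixup);
	e->handler = demo_encode(&e->handler, handler);
}
```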

```c
#define EXCEPTION_TABLE(align)					\
	. = ALIGN(align);					\
	__ex_table : AT(ADDR(__ex_table) - LOAD_OFFSET) {	\
		VMLINUX_SYMBOL(__start___ex_table) = .;		\
		KEEP(*(__ex_table))				\
		VMLINUX_SYMBOL(__stop___ex_table) = .;		\
	}
```
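The linker script gathers every `__ex_table` input section into one output section and brackets it with `__start___ex_table` and `__stop___ex_table`. The same idea works in a user-space ELF program, because GNU ld (and lld) define `__start_SECNAME` and `__stop_SECNAME` for any section whose name is a valid C identifier. The sketch assumes a GCC-compatible compiler and such a linker; `demo_ex_table`, `struct demo_ext` and `DEMO_EXTABLE()` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical entry; its size is a multiple of its alignment, so objects
 * placed back to back in the section form a proper array. */
struct demo_ext {
	unsigned long from, to;
};

/* Each use drops one entry into section "demo_ex_table", much as
 * _ASM_EXTABLE() uses .pushsection "__ex_table". */
#define DEMO_EXTABLE(name, f, t)					\
	static const struct demo_ext name				\
	__attribute__((used, section("demo_ex_table"))) = { (f), (t) }

DEMO_EXTABLE(demo_e1, 0x10, 0x90);
DEMO_EXTABLE(demo_e2, 0x20, 0xa0);
DEMO_EXTABLE(demo_e3, 0x30, 0xb0);

/* Defined by the linker, playing the role of __start___ex_table/__stop___ex_table. */
extern const struct demo_ext __start_demo_ex_table[];
extern const struct demo_ext __stop_demo_ex_table[];

static size_t demo_ext_count(void)
{
	return (size_t)(__stop_demo_ex_table - __start_demo_ex_table);
}

static const struct demo_ext *demo_ext_find(unsigned long from)
{
	for (const struct demo_ext *e = __start_demo_ex_table;
	     e < __stop_demo_ex_table; e++)
		if (e->from == from)
			return e;
	return NULL;
}
```

The entries come from separate definitions, yet the loop sees one contiguous array. Their order inside the section is up to the toolchain, so code that needs a particular order has to sort the entries itself.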

```c
bool ex_handler_default(const struct exception_table_entry *fixup,
			struct pt_regs *regs, int trapnr)
{
	regs->ip = ex_fixup_addr(fixup);
	return true;
}
```

```c
int fixup_exception(struct pt_regs *regs, int trapnr)
{
	const struct exception_table_entry *e;
	ex_handler_t handler;

	e = search_exception_tables(regs->ip);
	if (!e)
		return 0;

	handler = ex_fixup_handler(e);
	return handler(e, regs, trapnr);
}
```
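`fixup_exception()` reduces to a lookup keyed on the faulting instruction pointer, followed by a jump to the fixup. Here is a user-space sketch under simplifying assumptions: entries hold absolute addresses instead of relative offsets, every entry uses the default handler, and `struct demo_entry`, `demo_table` and `demo_fixup_exception()` are hypothetical. The kernel keeps its exception table sorted by instruction address so that it can be binary-searched, which `bsearch()` does here.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdlib.h>

/* Hypothetical absolute-address entry. */
struct demo_entry {
	unsigned long insn;	/* instruction allowed to fault */
	unsigned long fixup;	/* where execution resumes instead */
};

struct demo_regs {
	unsigned long ip;
};

/* Must stay sorted by insn for bsearch(). */
static const struct demo_entry demo_table[] = {
	{ 0x1000, 0x9000 }, { 0x1040, 0x9010 }, { 0x2000, 0x9020 },
};

static int demo_cmp(const void *key, const void *elem)
{
	unsigned long ip = *(const unsigned long *)key;
	unsigned long insn = ((const struct demo_entry *)elem)->insn;

	return (ip > insn) - (ip < insn);
}

/* Like fixup_exception() + ex_handler_default(): find the entry for the
 * faulting ip and, if there is one, redirect execution to its fixup. */
static bool demo_fixup_exception(struct demo_regs *regs)
{
	const struct demo_entry *e =
		bsearch(&regs->ip, demo_table,
			sizeof(demo_table) / sizeof(demo_table[0]),
			sizeof(demo_table[0]), demo_cmp);

	if (!e)
		return false;	/* no entry: the caller treats it as a kernel bug */
	regs->ip = e->fixup;
	return true;
}
```

A fault at 0x1040 resumes at 0x9010; a fault at 0x1044 has no entry, so `regs->ip` is left unchanged and the fault is reported as a bug.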